Introduction

While the previous decade(s) focused on the adoption of SaaS, PaaS, or IaaS by companies all over the world as part of their IT strategy, the current decade has exposed a lot of risks in these strategies, as I mentioned in my previous blog. While large enterprises can afford to have their IT departments manage their own data centers and adopt forms of (hybrid) cloud strategies, this is a lot harder for smaller companies that do business across the globe. Adopting cloud services can help reduce costs (especially CAPEX), reduce reliance on an increasingly scarce pool of IT experts, and help a business focus on value creation. There are risks, however, when adopting online services without proper consideration of multiple factors, of which ‘Digital Sovereignty’ is one. In this blog, I would like to address some of these risks and provide some methods to limit or mitigate them.

No cloud is ever in the same place

Understanding the impact of adopting and relying on cloud services for core business processes, and understanding the risk of losing the authority and capacity to manage these digital environments, is becoming increasingly important. Considering Digital Sovereignty as part of a business’s IT strategy is therefore crucial. Questions such as ‘In which country does the (parent) company reside?’ and ‘What technologies do those companies rely on, and can interruptions there impact our business?’ are important to consider.

No, this does not mean you need to run away from the ‘Big 3’ cloud providers (AWS, Azure, Google Cloud). Their datacenters in Europe still fall under European regulations, and while there are European alternatives, they’re often less ‘feature-rich’. Still, native European providers such as OVHcloud, IONOS, or Scaleway could be interesting options depending on your needs. Adopting a multi- or hybrid-cloud strategy can help reduce the risks that ‘Digital Sovereignty’ attempts to emphasize.

So many clouds

But doesn't adopting multiple clouds just make things more complex? Well... you have a point there. Given the skills shortage I mentioned in the introduction, a multi-cloud strategy doesn't directly help with that. However, this extra complexity can be reduced by choosing the right architecture, working methods, and technologies. There are countless good resources available on multi-cloud architectures, such as documentation, blogs, books, and research.

When adopting a multi-cloud strategy to mitigate risks (related to Digital Sovereignty), it is important to understand your current workloads to determine which cloud providers fit the needs of the company. What type of architecture is my current environment using, and how does this map to a multi-cloud environment? Understand the type of workloads that are run, how data needs to be handled for those workloads (both in transit and at rest), and the required response times, and validate that the cloud providers can meet those requirements.
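One way to make this mapping exercise concrete is to write the requirements down as data and filter candidate providers against them. The following is a minimal sketch in Python, not a selection method: the provider names, regions, services, and latency figures are all hypothetical placeholders that you would replace with your own measurements and the providers' actual offerings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str                 # hypothetical provider label
    regions: frozenset[str]   # jurisdictions where data can be stored
    services: frozenset[str]  # services on offer
    latency_ms: int           # assumed round-trip time to your users


@dataclass(frozen=True)
class Workload:
    name: str
    allowed_regions: frozenset[str]  # where the data may reside
    needed_services: frozenset[str]
    max_latency_ms: int


def candidates(workload: Workload, providers: list[Provider]) -> list[str]:
    """Return the names of providers that satisfy every requirement."""
    return [
        p.name
        for p in providers
        if p.regions & workload.allowed_regions       # at least one permitted region
        and workload.needed_services <= p.services    # every required service is offered
        and p.latency_ms <= workload.max_latency_ms   # response time is acceptable
    ]


# Illustrative data only; none of these values describe a real provider.
PROVIDERS = [
    Provider("provider-a", frozenset({"eu", "us"}),
             frozenset({"kubernetes", "object-storage", "managed-db"}), 25),
    Provider("provider-b", frozenset({"eu"}),
             frozenset({"kubernetes", "object-storage"}), 40),
    Provider("provider-c", frozenset({"us"}),
             frozenset({"kubernetes", "object-storage", "managed-db"}), 20),
]

CRM = Workload("crm", frozenset({"eu"}),
               frozenset({"kubernetes", "object-storage"}), 50)
```

Calling `candidates(CRM, PROVIDERS)` keeps only the providers that can store data in a permitted region, offer every required service, and stay within the latency budget. The value of the exercise lies less in the code than in being forced to state the requirements per workload explicitly.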

Picking one of the Big 3 in combination with a European cloud provider such as Scaleway would help mitigate the risks of having your entire workload running at a single cloud provider, and it helps limit vendor lock-in. For example, N-tier or microservice architectures can typically be mapped onto a multi-cloud setup very well, especially when using the correct practices and tools. I am not going to start a lecture about these practices (it would take me several blogs to do that); there are many sources out there related to DevOps, DevSecOps, and Platform Engineering. This can be daunting. Understanding your company’s architecture and core business applications, working out how these can be mapped onto the cloud, and getting the right help to adopt the practices mentioned earlier correctly all require (different) skills.

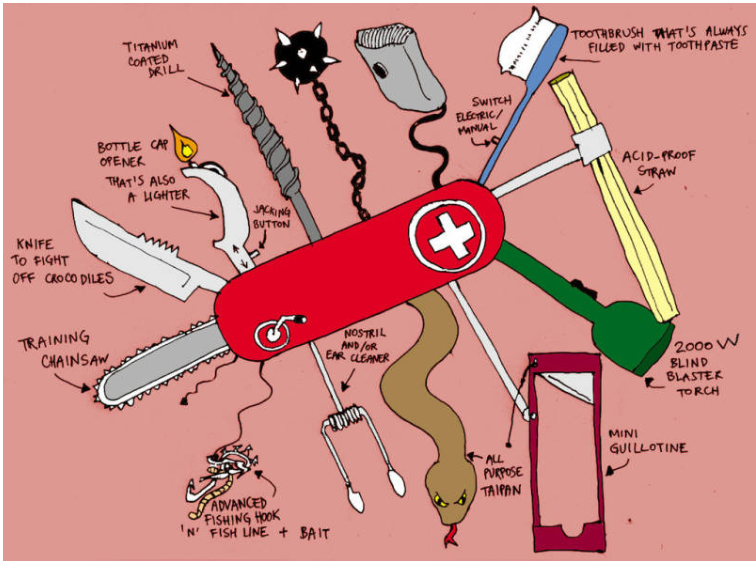

A good tool improves the way you Geek

I already mentioned something about the IT landscape being large with a growing set of disciplines. In addition to disciplines, there are many tools out there to support these different disciplines. Assuming proper Dev(Sec)Ops/Platform Engineering practices are adopted, there are many tools available that can help. As a side note, there are many European solution providers available; an interesting starting place to take a look would be here. Picking the right tools can help mitigate risks related to both skills and complexity. Typically, I like to suggest open source tools for many of the DevOps practices.

There are many open-source tools available (also, engineers tend to like it when companies adopt open source, so you get automatic buy-in). IaC and CaC tools that can handle heterogeneity, such as Pulumi or Terraform/OpenTofu and Ansible, Puppet, or Chef, help with a multi-cloud strategy. For SCM and CI/CD, there are Apache Allura, Gitea, or GitLab (I’m a fan of GitLab, but some of the cooler features require a license). In case of containerized workloads (especially if you want to adopt cloud-native design patterns), the Cloud Native Computing Foundation (CNCF) provides a nice overview. The use cases for each of the tools are already explained on the page and do not require additional explanation here.

From my perspective, you want to automate a typical DTAP (Development, Testing, Acceptance, Production) setup as much as possible with tools that provide as much flexibility as possible (limit/prevent vendor lock-in). Limit DIH, and focus on (open source or European) tool stacks that help limit some of the risks related to Digital Sovereignty. Finally, consider tools that increase the chance of finding skilled engineers who know how to use them. Picking the correct tools for your company’s environment requires knowledge, which in turn requires vision and a good understanding of that environment.

rm -rf /

When adopting cloud services, understanding where your data is in transit, stored, and processed has always been an important topic, partly due to compliance regulations or security considerations. While many cloud providers offer storage in your country or on your continent, nations typically expect you to handle and store (sensitive) data in a specific way if you serve customers in their nation. Not to mention your company’s own sensitive data, such as research and marketing.

Let’s start with data at rest. In case of off-premise backups, encrypting the data before storing it in the cloud is typically a good way to ensure that it cannot be read by others, either during transit to the off-site backup location or once it is stored there. Assuming you are using quantum-safe algorithms, of course. An on-premise or cloud Hardware Security Module (HSM) can be used to encrypt the data. This makes decrypting the data hard, but there are ways to handle this. On that note, many cloud providers offer the ability to integrate key material generated with your own HSM with their Key Management Services in the cloud. AWS nicely explains that process here.

This brings us to handling secrets (SSH keys, tokens, certificates, asymmetric key material, passwords, etc.). Secret management is an important topic, especially for managing data in cloud environments. Again, there are many cloud offerings for this; typically, they integrate very well within the cloud that is offering the service. However, in a multi-cloud setup, this might require a bit more thinking. There are many (open source) solutions available that can help with this, such as HashiCorp Vault (although HashiCorp did change its license in 2023) or OpenBao. There are additional topics to consider, such as certificate management for securing your data in transit. This could be done via Vault, but there are other open source alternatives, such as OpenXPKI or Dogtag.
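In a multi-cloud setup it helps if application code does not care which secret store sits behind it. The sketch below shows that idea in Python, under stated assumptions: `SecretBackend`, `InMemoryBackend`, `database_dsn`, and the secret path `app/db/password` are hypothetical names made up for illustration, and no real Vault or OpenBao API is called. A real adapter would implement the same two methods against the store of your choice.

```python
from typing import Protocol


class SecretBackend(Protocol):
    """Hypothetical minimal interface that every secret store adapter implements."""

    def read(self, path: str) -> str: ...

    def write(self, path: str, value: str) -> None: ...


class InMemoryBackend:
    """Stand-in backend for tests and local development; never use in production."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def read(self, path: str) -> str:
        return self._store[path]  # raises KeyError if the secret does not exist

    def write(self, path: str, value: str) -> None:
        self._store[path] = value


def database_dsn(secrets: SecretBackend, host: str) -> str:
    """Build a connection string without knowing where the password is stored."""
    password = secrets.read("app/db/password")  # hypothetical secret path
    return f"postgresql://app:{password}@{host}/app"
```

Because `database_dsn` only depends on the interface, swapping a cloud-specific secret service for a self-hosted one becomes a change in one adapter class rather than in every application.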

Wait... there are consequences!

With the introduction of NIS2 in Europe in 2023, not having your cybersecurity in order can have a severe impact. While the adoption (Cyberbeveiligingswet) in the Netherlands is delayed, companies not adhering to it risk penalties or fines. Like I mentioned in the previous blog, it’s crucial for a company to properly handle cybersecurity when developing software and when installing and configuring its (cloud) infrastructure. There should be practices and tools in place that support this. Some help is provided by governments, such as guidelines, the basic cyber resilience scan, and principles that should be followed.

There are several important aspects to consider, some of which have already been mentioned, such as data security, and some of which are covered in the links of the previous paragraphs. In terms of prevention, implementing the benchmarks and guides provided by organisations such as the Center for Internet Security (CIS) or the DoD is typically good practice for hardening your infrastructure. These organisations publish CIS Benchmarks and Security Technical Implementation Guides (STIGs) for operating systems, network equipment, web servers, cloud platforms, and much more. There are many tools available that allow (automated) scanning to verify that the expected configuration is in place. For CIS or STIG, this can be done with tools such as OpenVAS, OpenSCAP, or Nessus.
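To give a feel for what such a scanner does under the hood, here is a minimal sketch in Python that compares an `sshd_config` file against a set of expected values. The two expected settings are illustrative assumptions of mine, not quotes from a CIS Benchmark or STIG, and the parser is deliberately simplified: it ignores `Match` blocks, `Include` directives, and compiled-in defaults.

```python
# Illustrative hardening expectations; NOT taken from an official benchmark.
EXPECTED = {
    "permitrootlogin": "no",
    "passwordauthentication": "no",
}


def parse_sshd_config(text: str) -> dict[str, str]:
    """Parse 'Keyword value' lines; keywords are compared case-insensitively."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue  # keyword without a value; ignored in this sketch
        keyword, value = parts[0].lower(), parts[1].strip()
        # sshd uses the first value it finds for a keyword, so keep the first.
        settings.setdefault(keyword, value)
    return settings


def findings(text: str, expected: dict[str, str] = EXPECTED) -> list[str]:
    """Return one human-readable finding per deviation from the expectations."""
    actual = parse_sshd_config(text)
    result = []
    for keyword, want in expected.items():
        got = actual.get(keyword)
        if got is None:
            result.append(f"{keyword}: not set explicitly (expected {want})")
        elif got.lower() != want:
            result.append(f"{keyword}: {got} (expected {want})")
    return result
```

An empty list from `findings(...)` means the file matches the expectations. Dedicated tools such as OpenSCAP cover far more rules and edge cases, so treat this as an explanation of the principle rather than a replacement.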

While scanning the infrastructure is important, ingraining practices such as linting, code reviews, SAST, and audit tools before code even goes into production is also a good way to mitigate security risks. Most IDEs provide or allow the integration of linters, so developers get feedback directly while writing code. When integrating this with a VCS, linting can be enforced via Git hooks. Considering the integration capabilities of a VCS, this typically allows Static Application Security Testing (SAST) and auditing tools to be integrated during the build process as well.
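As an illustration of enforcing a check through a Git hook, the sketch below is a Python pre-commit hook that rejects a commit when a staged `.py` file does not parse. This is an assumption-laden example: a plain syntax check with the standard library's `ast` module stands in for a real linter, and the function names are my own. In practice you would call your linter of choice at the same spot.

```python
import ast
import subprocess
from typing import Optional


def staged_python_files() -> list[str]:
    """List staged (added, copied, or modified) files that end in .py."""
    out = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [name for name in out.splitlines() if name.endswith(".py")]


def syntax_problem(source: str, filename: str = "<staged>") -> Optional[str]:
    """Return a 'file:line: message' string if the source does not parse."""
    try:
        ast.parse(source, filename=filename)
    except SyntaxError as exc:
        return f"{filename}:{exc.lineno}: {exc.msg}"
    return None


def main() -> int:
    """Check every staged Python file; a non-zero result aborts the commit."""
    problems = []
    for name in staged_python_files():
        # `git show :<path>` prints the staged version, not the working copy.
        staged = subprocess.run(
            ["git", "show", f":{name}"],
            capture_output=True, text=True, check=True,
        ).stdout
        problem = syntax_problem(staged, name)
        if problem:
            problems.append(problem)
    for problem in problems:
        print(problem)
    return 1 if problems else 0
```

To use it, save the script as an executable `.git/hooks/pre-commit` file with a Python shebang line and end it with `raise SystemExit(main())`. Keep in mind that local hooks can be bypassed, so the same check should also run in the CI pipeline.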

Clouds typically provide auditing tools that allow scanning of resources in the cloud; think of AWS Audit Manager or Microsoft Defender for Cloud. These tools can integrate benchmarks from CIS or STIG. Always set up some kind of centralized logging and auditing regardless of the type of environment, be it on-premise, hybrid, single, or multi-cloud. And always store audit logs in an immutable location. So far, I have hinted at a couple of practices, but there are many more, such as IAM, Cyber Incident Management, and Awareness training, all mentioned as part of the NIS2 regulations. From my perspective, these items are typically overlooked, poorly adopted, and poorly implemented. This can create significant risks to your company, but also to the ‘Digital Sovereignty’ of your nation and Europe. With the introduction of NIS2, in addition to the security risks for your company, there can also be legal consequences for not properly addressing cybersecurity.
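Immutability itself is a property of where you store the logs (for example, write-once storage). A complementary technique, and the one sketched here, is a hash chain that makes tampering detectable: every entry stores the hash of the previous one. This is a minimal Python sketch with hypothetical function and field names, not a description of how any particular cloud audit service works.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with a canonical form of the event."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append(log: list[dict], event: dict) -> None:
    """Add an event to the log, chaining it to the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; any edited, inserted, or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any earlier event makes `verify` return `False`. Note the limitation: dropping the most recent entries goes unnoticed unless the latest hash is also kept somewhere else, which is one more reason to combine this with immutable storage.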

The council of three (or maybe four, but we need ‘n / 2 + 1’ for majority)

Right, complexity: how did I make this less complex? I discussed multi-cloud, different strategies and architectures, a series of tools for different use cases, handling your data in the cloud, and the impact of cybersecurity. It still looks like you need that ‘duizendpoot’ I mentioned in the previous blog, the IT ‘guy’ in the basement who knows and can fix everything. Considering such people don’t exist, there are some key roles in a company that should be looked at.

The first is a person who can properly translate the company’s mission and vision into an IT strategy, typically a CTO. The person responsible for this role should have some technical knowledge and a general understanding of technologies and domains within the IT landscape. More important is an understanding of the domain(s) the company is operating in and how IT solutions can help in that domain. The person who determines the IT strategy can be the driving or the limiting force for your company.

The second role I would consider to be crucial is a solutions architect who can effectively translate the strategic goals into an architecture that meets (or will meet) these goals. Having a proper understanding of how your business services are mapped onto your software and infrastructure is crucial to make any sane choices moving forward, whether that’s on-premise or leveraging a (multi/hybrid) cloud setup. The persons fulfilling these two roles are not required to know everything; that would be impossible. But the combination of these roles needs to have at a minimum the knowledge to translate the business requirements into a strategy that helps any additional roles make informed choices that help your company move forward to meet those goals.

This strategy also helps determine which roles to hire or outsource. For example, a cloud engineer/architect requires this information to determine whether moving to a cloud setup can help meet the business requirements, which cloud strategy fits best (single, multi, or hybrid cloud), and whether European cloud provider(s) meet those requirements. This subsequently helps determine which practices and technologies to pick, whether closed or open source fits best, and whether this should be SaaS, PaaS, or IaaS-based.

Finally, there will always be an initial investment to update, upgrade, or migrate a company’s environment. But when done correctly, the number of hours needed to maintain a properly set-up environment will be significantly less than the initial investment of creating it. This is especially true when people with the right skills were involved from the beginning, people who consider the scalability of the environment, its extensibility based on possible future needs, and security. You know, that thing called vision.

Summary of all the above

Data, technologies, competence/knowledge, and (cyber) security all require thought when considering Digital Sovereignty. In this blog, I attempted to highlight some technologies, architectures, and practices to consider when addressing these aspects. Some of my colleagues, when reading this blog, will tell me about the many topics I did not address, or that could have a blog of their own. They would be correct, and they probably should (maybe they can help with this (insert evil laugh)). My goal was to address some of the nuances to consider when defining your company’s IT strategy while wearing Digital Sovereignty ‘glasses’.

Addressing all these aspects is not easy, but it is crucial for a company to stay ahead, operate securely, and comply with your country’s regulations. At SUE, we created a tool called Multistax, which addresses many of the challenges mentioned in this blog. We have consultants who can help with the architecture, onboarding, and transition to a multi-cloud environment. Feel free to reach out to us for a chat!